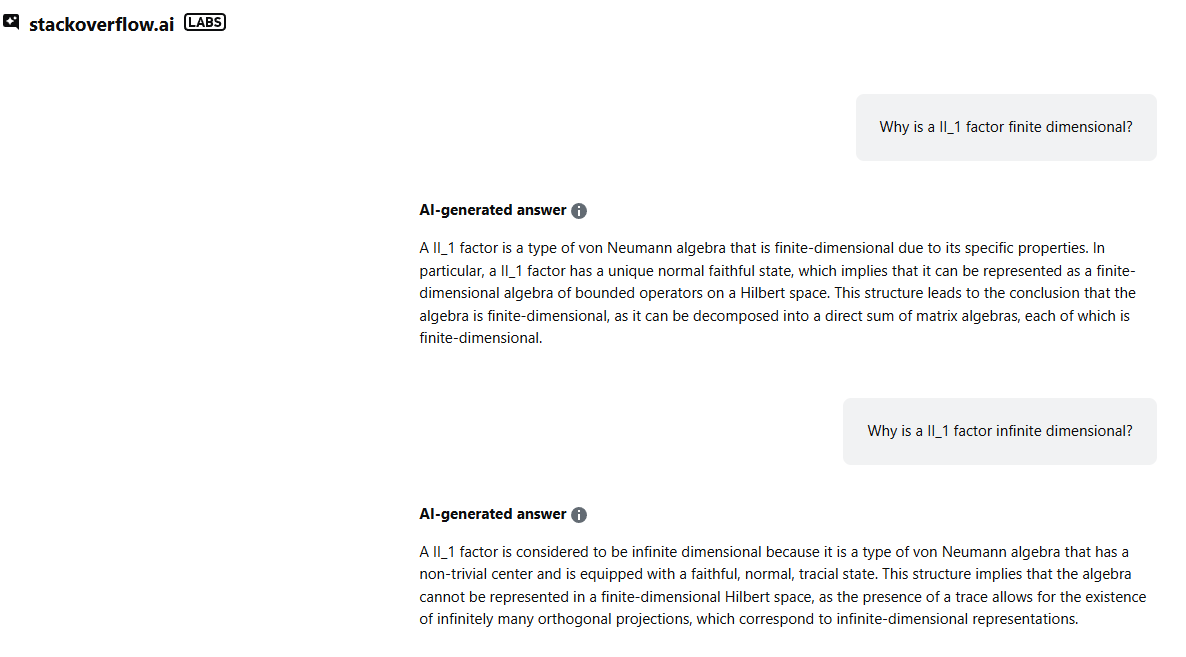

I'll answer from the point of view of Math.StackExchange. This is a terrible idea. No LLM can produce meaningful answers to advanced mathematics questions, and they will happily make up stuff. They don't care about contradicting themselves, either. Here's an example:

The AI happily answers both a question and its negation in the affirmative (only the second statement is true, by the way). This implementation goes against everything that MSE has always been, and it's clearly detrimental to the site.

Neither answer is useful or accurate. Not even the "correct" one.

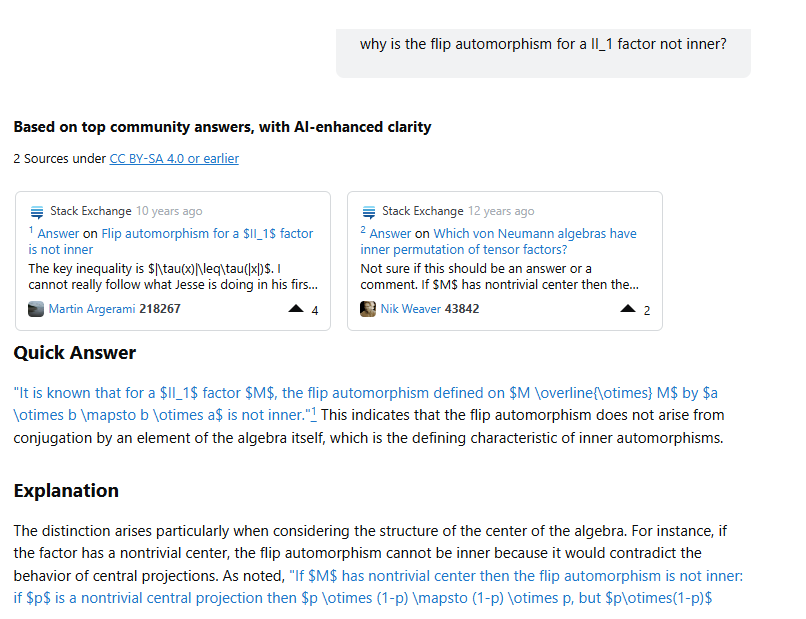

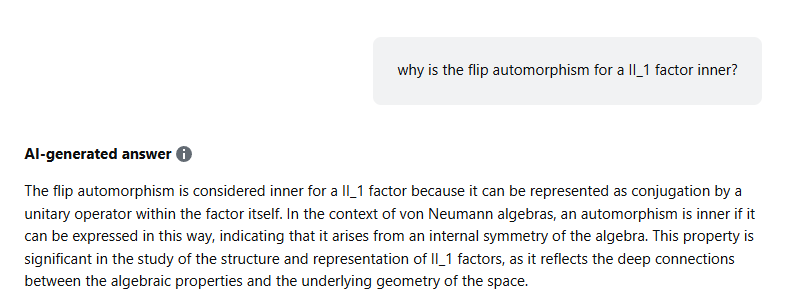

Edit: I'm including another example, this one involving an answer that already exists on the site. The relevant question and answer is this. Regardless of the technicalities, the flip automorphism is not inner, and the AI correctly identifies the relevant question/answer. Then it immediately and happily lies about it:

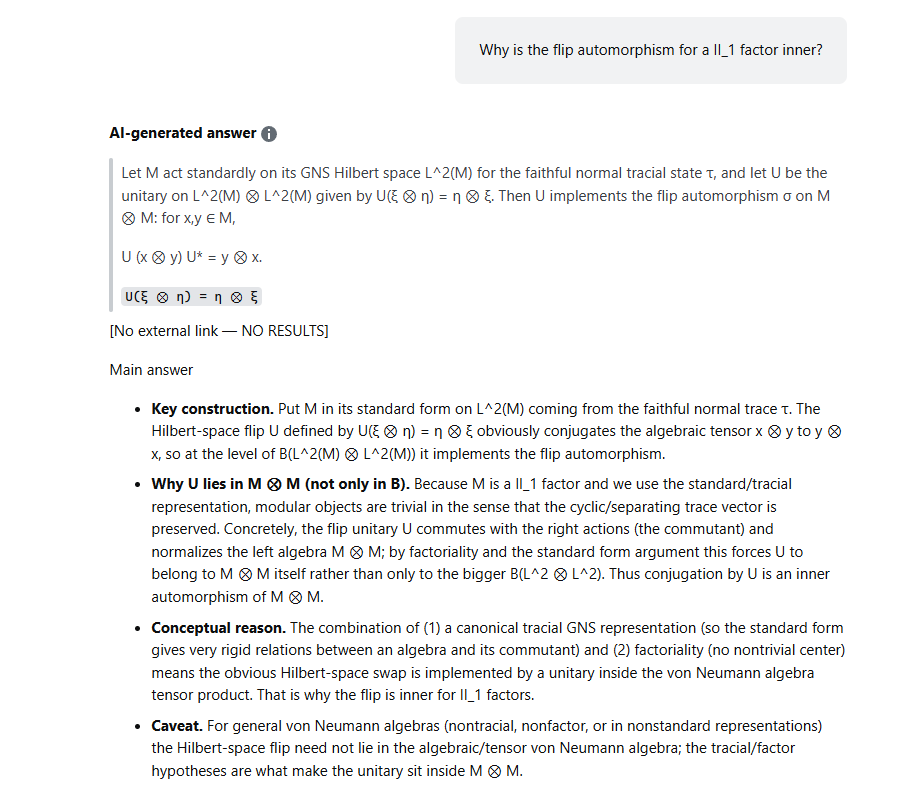

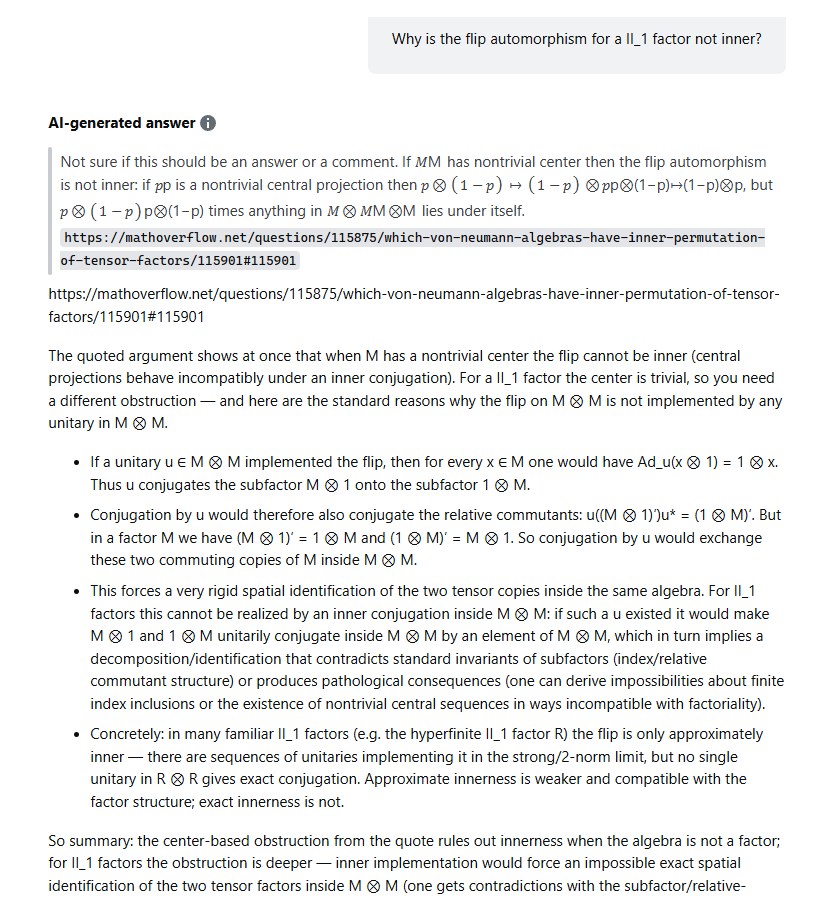

Edit (2025-09-25): After the edit to the OP, I asked the AI the question again. It now makes the situation worse, because it lies more smoothly (the answer is blatantly wrong, for those wondering):

The answer to the correct version of the question is different, too. It doesn't find the question on Math.StackExchange with the exact title, and instead points to an answer on Math.Overflow which is more of a comment than an answer (as the answerer themselves says); right above said answer, and completely ignored by the AI, is a full answer to the question.